- Haven't finished grading your revisions or homeworks yet -- I'll have them by Thursday.

- Any questions on project 2?

- I have assigned a new homework on DEs, due next week, Friday morning. So get your project done first, but don't forget your homework.

- Andy's "DEs in a Day" page.

- Euler's method:

- Our authors start (p. 316) with

\[ Y'(t)=f(t,Y(t)) \]

with initial condition

\[ Y(a)=\eta \]

I may be making a few references to that page as we go along. Let's start with this one (about what we're actually solving, or attempting to solve).

The upshot is expressed well in the authors' quote that "...the numerical solution values $y_{n-1}$ and $y_n$ do not lie on the true solution. In fact, they do not even lie on the same solution."


Let's pick up, then, on p. 321. Euler's method is the simplest, sanest starting point, and it can be derived in two different ways:

- By integrating (p. 321), or

- As a tangent line approximation (Figure 8.10, p. 322) -- which, by the way, is the same as truncating the Taylor series after the linear term.
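Written out as a sketch in the notation of p. 316 (with $t_{n+1}=t_n+h$ and $y_n\approx Y(t_n)$), the two derivations are

\[ Y(t_{n+1}) = Y(t_n) + \int_{t_n}^{t_{n+1}} f(t,Y(t))\,dt \approx Y(t_n) + h\,f(t_n,Y(t_n)) \]

(approximating the integral by the left-endpoint rectangle), and

\[ Y(t_n+h) = Y(t_n) + h\,Y'(t_n) + \frac{h^2}{2}\,Y''(\xi_n), \qquad \xi_n\in(t_n,t_n+h) \]

(dropping the remainder and using $Y'(t_n)=f(t_n,Y(t_n))$). Either way, replacing $Y(t_n)$ by $y_n$ gives

\[ y_{n+1} = y_n + h\,f(t_n,y_n). \]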

- The method as a recursive algorithm is highlighted on p. 323 (box equation). Note that the step size $h_n$ depends on $n$, so it can vary from step to step. This means you might start thinking about adaptive step-size control right away....
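A minimal Python sketch of the recursion with a step size that may change from step to step. The function name `euler_nodes` and the two grids are illustrative assumptions, not the text's code from p. 323:

```python
import math


def euler_nodes(f, ts, y0):
    """Euler's method on an increasing grid ts[0] < ts[1] < ... < ts[-1].

    The step size h_n = ts[n+1] - ts[n] may differ from step to step.
    Returns the list [y_0, y_1, ...], one approximation per node.
    """
    ys = [y0]
    for n in range(len(ts) - 1):
        h_n = ts[n + 1] - ts[n]                    # step size for step n
        ys.append(ys[-1] + h_n * f(ts[n], ys[-1]))
    return ys


# Usage: y' = y, y(0) = 1 on [0, 1], whose exact value at t = 1 is e.
f = lambda t, y: y
uniform = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]   # five equal steps, h = 0.2
graded = [0.0, 0.3, 0.55, 0.75, 0.9, 1.0]  # five unequal steps
print(euler_nodes(f, uniform, 1.0)[-1], euler_nodes(f, graded, 1.0)[-1], math.e)
```

An adaptive method would choose each $h_n$ as it goes, rather than taking the grid as given.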

- Let's have a look at the code on p. 323.

- Let's do a few steps of Euler by hand, with the differential equation $f'(x)=f(x)$ and initial condition $f(0)=1$. Use five steps on the interval $[0,1]$ (so $h=0.2$).
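Worked out, taking $f(0)=1$ (with $f(0)=0$, both the exact solution and every Euler iterate are identically zero). Writing $y_k\approx f(x_k)$ with $x_k=0.2k$, each step multiplies by $1+h$:

\[ y_{k+1} = y_k + h\,y_k = 1.2\,y_k, \qquad y_k = 1.2^k \]

\[ \begin{array}{c|cccccc} k & 0 & 1 & 2 & 3 & 4 & 5 \\ \hline x_k & 0 & 0.2 & 0.4 & 0.6 & 0.8 & 1 \\ y_k & 1 & 1.2 & 1.44 & 1.728 & 2.0736 & 2.48832 \\ e^{x_k} & 1 & 1.22140 & 1.49182 & 1.82212 & 2.22554 & 2.71828 \end{array} \]

So at $x=1$ the error is $e-2.48832\approx 0.22996$.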

- Then we'll flip over to the code and see how it looks... You'll have to adapt the first example, which is described on p. 323. Let's have a look at that one first.

- An example for the non-autonomous equation $y'(t)=\sin(t)\,y(t)$.

- We can solve this one analytically by separation of variables.
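Separating variables, and assuming the initial condition is given at $t=0$ (the value $y(0)$ is not specified above):

\[ \frac{dy}{y} = \sin t\,dt \;\Longrightarrow\; \ln|y| = -\cos t + C \;\Longrightarrow\; y(t) = y(0)\,e^{1-\cos t} \]

Check: at $t=0$ the exponential is $e^{1-\cos 0}=1$, and differentiating gives $y'=\sin t\,y$.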

- Let's compare some numerical solutions.
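A sketch comparing Euler with the exact solution of the example above, assuming $y(0)=1$ on the interval $[0,\pi]$ (neither is specified in the notes), so that $y(t)=e^{1-\cos t}$. The helper name `euler` is illustrative:

```python
import math


def euler(f, a, b, y0, n):
    """Euler's method with n equal steps on [a, b]; returns the value at t = b."""
    h = (b - a) / n
    y = y0
    for k in range(n):
        y += h * f(a + k * h, y)      # t_k = a + k h, recomputed to avoid drift
    return y


f = lambda t, y: math.sin(t) * y
exact = lambda t: math.exp(1.0 - math.cos(t))   # exact solution when y(0) = 1

a, b = 0.0, math.pi
for n in (25, 50, 100, 200):
    err = abs(euler(f, a, b, 1.0, n) - exact(b))
    print(f"n = {n:3d}   h = {(b - a) / n:.4f}   |error at b| = {err:.3e}")
```

Each halving of $h$ should roughly halve the error, as expected for a first-order method.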

- How should you feel about your numerical solution if you didn't have the exact solution to compare it to?

- The obvious question is this: "How should we choose the step size(s)?"
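One common heuristic (an assumption here, not something from the text) is to compare runs with step $h$ and step $h/2$: for a first-order method their difference roughly equals the error of the finer run, so it is available even without an exact solution. A sketch on the example above, assuming $y(0)=1$ on $[0,\pi]$:

```python
import math


def euler(f, a, b, y0, n):
    """Euler's method with n equal steps on [a, b]; returns the value at t = b."""
    h = (b - a) / n
    y = y0
    for k in range(n):
        y += h * f(a + k * h, y)
    return y


f = lambda t, y: math.sin(t) * y
a, b, y0 = 0.0, math.pi, 1.0

n = 100
coarse = euler(f, a, b, y0, n)        # step h
fine = euler(f, a, b, y0, 2 * n)      # step h/2
estimate = fine - coarse              # estimated error of `fine`; no exact solution needed
actual = math.exp(1.0 - math.cos(b)) - fine   # possible here only because y is known
print(f"estimated error {estimate:.3e}, actual error {actual:.3e}")
```

If the estimate exceeds your tolerance, you can halve $h$ again, which is a crude form of step-size selection.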

- Now, as usual, we want to talk about errors (a function of step-size). Let's face it: we're clearly making errors.

Start by taking a peek at Figure 8.13, p. 325. It illustrates everything that we want to consider.

Our authors distinguish three types of error; this figure shows two:

- local discretization error, and

- global discretization error.

I want to prove a result which gives a bound on the error we're making (ignoring rounding error).

Compare this to the result at the bottom of p. 326. They claim that the global discretization error is proportional to $(b-a)h$. (We're in the ballpark!)
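For reference, here is the standard form of such a bound (stated, not proved), assuming $f$ is Lipschitz in $y$ with constant $L$, $|y''(t)|\le M$ on $[a,b]$, exact arithmetic, and $u_i$ the Euler values at $t_i=a+ih$:

\[ |y(t_i)-u_i| \le \frac{hM}{2L}\left[e^{L(ih)}-1\right] \]

At $i=N$ this is $\frac{hM}{2L}\left[e^{L(b-a)}-1\right]$, and since $e^x-1\approx x$ for small $x$, it is roughly $\frac{M}{2}(b-a)h$ when $L(b-a)$ is small: proportional to $(b-a)h$, as claimed on p. 326.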

- However Making

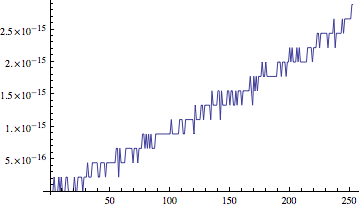

h small may not be a good idea if we add in the rounding error.

\[ |y(t_i)-u_i| \le \frac{1}{L}\left(\frac{hM}{2}+\frac{\delta}{h}\right) \left[e^{L(ih)}-1\right] + |\delta_0|e^{L(ih)} \]

The neat thing is that we can arrive at an optimal $h$ for reducing the error at the end of the interval (when $i=N$, so $ih=b-a$) below some given small error target $\epsilon$:

\[ |y(b)-u_N| \le \frac{1}{L}\left(\frac{hM}{2}+\frac{\delta}{h}\right) \left[e^{L(b-a)}-1\right] + |\delta_0|e^{L(b-a)} < \epsilon \]

provided we can bound the second derivative and the rounding errors:

\[ h=\sqrt{\frac{2\delta}{M}} \]

Questions:

- Why does this make sense?

- Will this choice get us below $\epsilon$?
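Where the formula for $h$ comes from: only the factor $g(h)=\frac{hM}{2}+\frac{\delta}{h}$ depends on $h$, and $g'(h)=\frac{M}{2}-\frac{\delta}{h^2}=0$ gives $h=\sqrt{2\delta/M}$, at which $g$ takes its minimum value $\sqrt{2M\delta}$. A numerical check of that minimization, with hypothetical values ($y'=y$ on $[0,1]$, so $L=1$ and $M=e$, rounding error $10^{-10}$ per step, no initial error); the helper `end_error_bound` is illustrative:

```python
import math


def end_error_bound(h, M, delta, L, delta0, length):
    """Right-hand side of the end-of-interval bound, with length = b - a."""
    growth = math.exp(L * length)
    return (h * M / 2 + delta / h) / L * (growth - 1) + abs(delta0) * growth


# Hypothetical values: y' = y on [0, 1] (L = 1, M = e), rounding 1e-10 per step.
M, L, delta, delta0, length = math.e, 1.0, 1e-10, 0.0, 1.0

h_opt = math.sqrt(2 * delta / M)
# Scan h from 1e-2 down to about 1e-9 on a logarithmic grid.
hs = [10 ** (-k / 100) for k in range(200, 900)]
h_best = min(hs, key=lambda h: end_error_bound(h, M, delta, L, delta0, length))
print(f"h_opt = {h_opt:.3e}, grid minimizer = {h_best:.3e}")
print(f"smallest bound = {end_error_bound(h_opt, M, delta, L, delta0, length):.3e}")
```

Making $h$ either larger or smaller than `h_opt` only increases the bound: larger $h$ inflates the truncation term, smaller $h$ inflates the accumulated rounding term.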


- Now on to higher-order Taylor methods

- Euler's method is an example of a Taylor method -- it's the first in a series (we might call it "Taylor-1"). So it's our introduction to Taylor methods, which we can think of as generalizations of Euler's method.

In particular, we generalize the formula on p. 323,

\[ y_{n+1}=y_{n}+hf(t_{n},y_n) \]

to the more general formula

\[ y_{n+1}=y_{n}+h\phi(t_{n},y_n;h) \]

Note the parameter $h$ in the $\phi$ function: $\phi$ will generally depend on $h$. We think of it as an improved derivative calculation -- but it's based only on information at the $n^{th}$ time step. So these are "one-step" methods -- they take into account only the preceding time step.
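A minimal sketch of the general one-step recursion, where `phi(t, y, h)` plays the role of $\phi(t_n,y_n;h)$ (the names are illustrative). Euler is the choice $\phi=f$; for the special test equation $y'=y$, where $y''=y$, the Taylor-2 choice works out to $\phi=y\,(1+h/2)$:

```python
import math


def one_step_method(phi, a, b, y0, n):
    """Generic one-step method y_{k+1} = y_k + h*phi(t_k, y_k, h), n equal steps.

    phi(t, y, h) is the "improved derivative"; it may depend on h.
    Returns the approximation at t = b.
    """
    h = (b - a) / n
    y = y0
    for k in range(n):
        y = y + h * phi(a + k * h, y, h)   # only (t_k, y_k) and h are used
    return y


def phi_euler(t, y, h):
    # Euler: phi is just f(t, y) = y for the test equation y' = y; h is ignored.
    return y


def phi_taylor2(t, y, h):
    # Taylor-2 for y' = y only: y'' = y, so phi = y' + (h/2) y'' = y (1 + h/2).
    return y * (1 + h / 2)


print(one_step_method(phi_euler, 0.0, 1.0, 1.0, 5))    # each step multiplies by 1.2
print(one_step_method(phi_taylor2, 0.0, 1.0, 1.0, 5))  # each step multiplies by 1.22
print(math.e)                                          # exact value y(1)
```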

- As we saw last time, Euler can be derived as the tangent line approximation to the solution of the ODE. We could just as easily pass a quadratic through the point $(t_i,y(t_i))$ that matches the slope and the curvature (2nd derivative) as well (Figure 8.19, p. 340). This is the idea behind Taylor-2.

And so on! That's the idea of the higher-order Taylor methods.

The name "Taylor-2" suggests that we can simply derive these methods from Taylor's theorem, and that's what I'd like to show now.

Let's have a look at a derivation with a slightly different emphasis from that in our text (our authors want to avoid partial derivatives, but I don't!:).
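A sketch of that derivation. Expand the true solution to second order:

\[ y(t+h) = y(t) + h\,y'(t) + \frac{h^2}{2}\,y''(t) + \frac{h^3}{6}\,y'''(\xi), \qquad \xi\in(t,t+h) \]

Since $y'(t)=f(t,y(t))$, the chain rule gives

\[ y''(t) = \frac{d}{dt}f(t,y(t)) = f_t(t,y) + f_y(t,y)\,y'(t) = f_t + f_y\,f \]

Dropping the remainder and evaluating at $(t_n,y_n)$ gives Taylor-2 in the form $y_{n+1}=y_n+h\phi(t_n,y_n;h)$, with

\[ \phi(t,y;h) = f(t,y) + \frac{h}{2}\Big(f_t(t,y) + f_y(t,y)\,f(t,y)\Big) \]

For example, for $y'=\sin(t)\,y$ we get $f_t=\cos(t)\,y$ and $f_y=\sin t$, so $y''=(\cos t+\sin^2 t)\,y$.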

Examples:

- Using successively higher-order Taylor methods

This code is not optimized for computation, but shows the dependence of each successive step on $h$ (values are defined recursively).

If you increase $n$, you will eventually exceed Mathematica's recursion limit.

A small change in how the $w$ values are computed makes it efficient for calculation.
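We don't reproduce the Mathematica code here, but the same trade-off shows up in Python. A sketch, assuming the recursive version defines each $w_i$ directly in terms of $w_{i-1}$ (the names `w_recursive` and `w_iterative` are hypothetical): the recursion depth grows with $n$, so it eventually hits Python's recursion limit (`sys.getrecursionlimit()`), while an equivalent loop computes the same values with no depth limit.

```python
import sys


def w_recursive(i, f, a, h, w0):
    """w_i defined recursively from w_{i-1}; recursion depth is i."""
    if i == 0:
        return w0
    prev = w_recursive(i - 1, f, a, h, w0)     # compute w_{i-1} once and reuse it
    return prev + h * f(a + (i - 1) * h, prev)


def w_iterative(n, f, a, h, w0):
    """The same recursion unrolled into a loop; no recursion depth involved."""
    w = w0
    for i in range(n):
        w = w + h * f(a + i * h, w)
    return w


f = lambda t, y: y
print(w_recursive(50, f, 0.0, 1 / 50, 1.0), w_iterative(50, f, 0.0, 1 / 50, 1.0))

n = 10 * sys.getrecursionlimit()
try:
    w_recursive(n, f, 0.0, 1 / n, 1.0)
except RecursionError:
    print(f"recursive version fails for n = {n}")
print(w_iterative(n, f, 0.0, 1 / n, 1.0))
```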

- Computer problem #1, p. 344 (I'm not sure why the authors suggest the step-size control that they do -- they seem to be off by a power of $h$, and a derivative.)

- A modification of that code to do other examples, e.g. Example 8.10, p. 340
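A sketch of Taylor-2 for the earlier example $y'=\sin(t)\,y$, using the partial derivatives $f_t=\cos(t)\,y$ and $f_y=\sin t$, so that $y''=f_t+f_y f=(\cos t+\sin^2 t)\,y$. It assumes $y(0)=1$ on $[0,\pi]$ (a test setup not specified in the notes) and is not the text's code:

```python
import math


def one_step(phi, a, b, y0, n):
    """y_{k+1} = y_k + h*phi(t_k, y_k, h) with n equal steps; returns y at t = b."""
    h = (b - a) / n
    y = y0
    for k in range(n):
        y += h * phi(a + k * h, y, h)
    return y


def f(t, y):
    return math.sin(t) * y


def phi_euler(t, y, h):
    return f(t, y)


def phi_taylor2(t, y, h):
    # f + (h/2) * (f_t + f_y * f) with f_t = cos(t) y and f_y = sin(t)
    return f(t, y) + (h / 2) * (math.cos(t) * y + math.sin(t) * f(t, y))


exact = math.exp(1.0 - math.cos(math.pi))   # y(pi) = e^2 when y(0) = 1
for n in (50, 100, 200):
    e1 = abs(one_step(phi_euler, 0.0, math.pi, 1.0, n) - exact)
    e2 = abs(one_step(phi_taylor2, 0.0, math.pi, 1.0, n) - exact)
    print(f"n = {n:3d}   Euler error {e1:.2e}   Taylor-2 error {e2:.2e}")
```

Halving $h$ should cut the Euler error by about a factor of 2 and the Taylor-2 error by about a factor of 4.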
