- Reminder: please keep the phones out of sight and sound.

- Two things Friday:

- Major/Minor lunch: " Please drop-by for lunch between 11:30 and 1:30 in the center atrium. On the menu this week, we have chicken tenders, mac & cheese, chips, and dessert."

- Friday Colloquim: Dr. Pamela Pyzza, Ohio Wesleyan University, will be joining us on Friday at 1pm. She will be giving a talk titled Models of Insect Olfaction in MEP 461.

- You have a homework problem due today: a regression -- by

hand. Please turn that in.

- For Friday: I've asked you and your (Dave) groups to

produce an addendum to your reports for Dave: all the

markings missing from Dave's stick for a 10 foot log,

obtained using a stated equation.

In the original problem I said that you should be able to reproduce any mark on the stick -- now's your chance to show that you can.

- You have a new assignment for Monday: I need you to evaluate yourselves and your groups for the Dave project. See the assignment page for details.

- We finished deriving the linear regression equations, using linear

algebra.

Again, the main advantage of this approach is that it can be easily generalized to models containing many parameters.

- Let's break into our new groups.

Then we'll check the Keeling results using Mathematica and some linear algebra, to see that we get the same fit to Stewart's Keeling data as we did using Mathematica's LinearModelFit.

How would you adjust things to get the quadratic fit? Let's try!

- At the end of that Mathematica file is a set of

diagnostics. Let's talk about those, now that we've

verified that the linear algebra works for determining

the parameters.

- Regression Diagnostics: Polya's "evaluate" step.

There are several things I always consider when creating a regression model:

- Graph the data first, and look for any "outliers"

-- really unusual values. These could be due to mistakes --

coding errors, etc. -- so we want to catch those first (and fix -- or

eliminate -- or live with them, depending on what we think is going

on).

If I see no pattern in the data, then I quit; otherwise, I choose my model and fit it.

- Graphical

check of Fit and Residuals - is there a

pattern to the residuals? If so, we go back to

the model-building step....

- residual "errors" are supposed to be "iid" -- independent and identically distributed (and we hope for normally distributed).

- Hopefully the residuals are not clustered, nor wildly different in size, nor different in appearance along the model.

- if you graph the residuals as a histogram, you get something resembling a normal distribution (bell-shaped curve).

- $R^2$: how much variation does my model explain?

- We break variance down into two parts: a fraction due to the model, and a fraction due to the residual.

- Let's derive this measure from squared "errors" of different types.

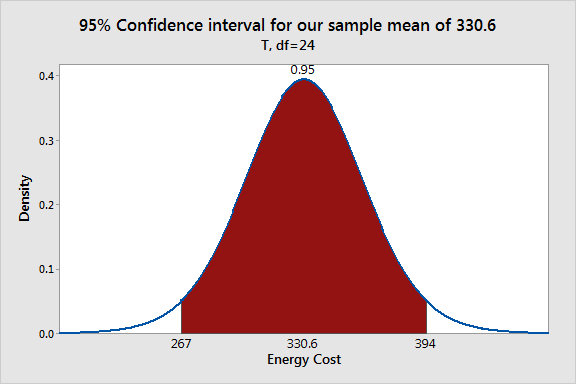

- Confidence intervals for parameters (essentially

equivalent to

p-values, but perhaps more informative).

The standard errors of the parameters pop out of the inverse matrix we compute, multiplied by the mean SSE. Once we have those, we have everything we need for confidence intervals.

We're often interested to know whether we can exclude a certain value from a confidence interval -- e.g., can we conclude that the slope parameter $b$ in a linear regression $y(x)=a+bx$ is not 0? If so, we conclude that there is a non-zero slope, and the model suggests that $x$ drives values up or down, depending on the sign of $b$.

- Eventually we may compare models, using

statistics such as anova, AIC, BIC, etc. For

now, we're going to focus on diagnostics pertaining to a single model.

- Graph the data first, and look for any "outliers"

-- really unusual values. These could be due to mistakes --

coding errors, etc. -- so we want to catch those first (and fix -- or

eliminate -- or live with them, depending on what we think is going

on).

- "The IPCC stands by its equation of 450 ppm = 2 degrees C [of warming]....": For years scientists have said that if atmospheric levels of carbon dioxide reach 450 parts per million (ppm) the planet would heat up by an average of 2 degrees Celsius above pre-industrial levels. They have also said that if the world crosses that threshold, ecosystems worldwide would suffer serious damage.