Section 5 Calculus Background

There are many aspects of calculus that we need for numerical analysis. My contention is that we tend to motivate ideas in calculus with discrete approximations, then pass to the limit and replace all the discrete stuff with continuous versions.

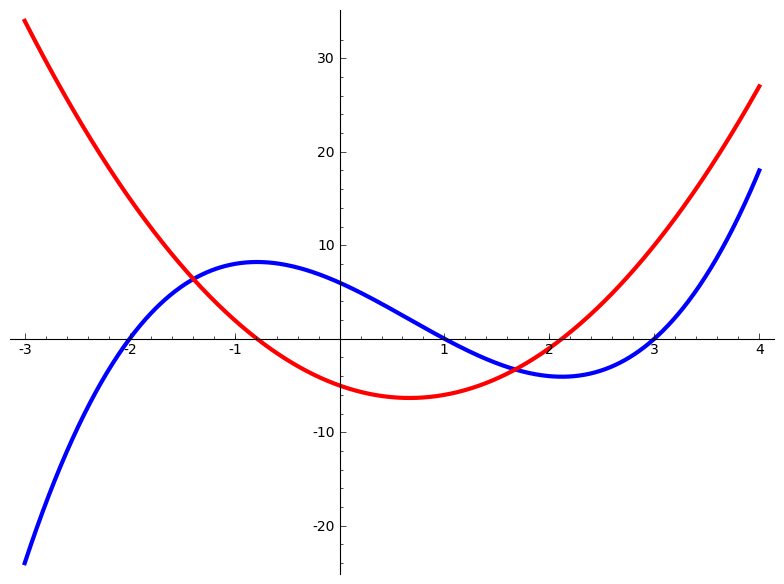

We start with the idea of functions and their derivatives. Hopefully you're all feeling good about those.

Then, in numerical analysis, we go back to all those motivating examples and explain that, actually, that's how all this calculus stuff is computed. :)

Subsection 5.1 Taylor Series

The basic idea is to replace functions that are sufficiently nice (smooth: differentiable, with enough higher derivatives) by polynomials that approximate them well.

The first case of interest is approximating a function by its tangent line at a particular point. The best linear approximation to a differentiable function about the point \(x=a\) is \begin{equation*}f(x)\approx f(a)+(x-a)f'(a).\end{equation*}

The best quadratic approximation to a function about the point \(x=a\) is \begin{equation*}f(x)\approx f(a)+(x-a)f'(a)+\frac{(x-a)^2}{2}f''(a).\end{equation*}
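To see the two formulas side by side, here is a minimal plain-Python sketch (not the notes' own Sage code). The helper names `linear_approx` and `quadratic_approx` are hypothetical, and the test function \(f(x)=\sqrt{x}\) about \(a=4\) is our own choice because its derivatives are easy by hand: \(f(4)=2\), \(f'(4)=\frac14\), \(f''(4)=-\frac{1}{32}\).

```python
import math

def linear_approx(f_a, df_a, a, x):
    """Tangent-line approximation f(a) + (x - a) f'(a)."""
    return f_a + (x - a) * df_a

def quadratic_approx(f_a, df_a, d2f_a, a, x):
    """Quadratic approximation f(a) + (x - a) f'(a) + (x - a)^2 / 2 * f''(a)."""
    return linear_approx(f_a, df_a, a, x) + (x - a) ** 2 / 2 * d2f_a

# Illustrative choice: f(x) = sqrt(x) about a = 4, with hand-computed derivatives
# f(4) = 2, f'(4) = 1/(2*sqrt(4)) = 1/4, f''(4) = -1/(4 * 4**1.5) = -1/32.
a, x = 4.0, 4.1
lin = linear_approx(2.0, 0.25, a, x)
quad = quadratic_approx(2.0, 0.25, -1.0 / 32.0, a, x)
exact = math.sqrt(x)

# The quadratic term should shrink the error noticeably this close to a.
print("linear:   ", lin, "error", abs(lin - exact))
print("quadratic:", quad, "error", abs(quad - exact))
```

Near \(a\) the extra term \(\frac{(x-a)^2}{2}f''(a)\) is small but corrects most of the tangent line's error.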

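More generally, the degree-\(n\) Taylor polynomial about \(x=a\) gives \begin{equation*}f(x)\approx \sum_{k=0}^{n}\frac{(x-a)^k}{k!}f^{(k)}(a)=f(a)+(x-a)f'(a)+\frac{(x-a)^2}{2!}f''(a)+\cdots+\frac{(x-a)^n}{n!}f^{(n)}(a).\end{equation*}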

What we're doing is matching the value and derivatives of the polynomial to those of the function at the point \(x=a\). Higher-degree polynomials capture more of the behavior of the function \(f\) at that one point (and we hope they also do a good job of capturing its behavior nearby).
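The general formula \(\sum_{k=0}^{n}\frac{(x-a)^k}{k!}f^{(k)}(a)\) only needs the list of derivative values at \(a\). A minimal sketch, assuming those values are supplied by hand; the name `taylor_poly` is hypothetical, and \(e^x\) about \(0\) is an illustrative choice because all of its derivatives there equal 1.

```python
import math

def taylor_poly(derivs, a, x):
    """Evaluate sum_{k=0}^{n} f^(k)(a) (x - a)^k / k!,
    where derivs = [f(a), f'(a), ..., f^(n)(a)]."""
    return sum(d * (x - a) ** k / math.factorial(k) for k, d in enumerate(derivs))

# Every derivative of exp is exp, so about a = 0 all derivative values are 1.
for n in range(1, 6):
    approx = taylor_poly([1.0] * (n + 1), 0.0, 0.5)
    print(n, approx, abs(approx - math.exp(0.5)))
```

Each extra matched derivative reduces the error at \(x=0.5\).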

Here is sine, approximated with polynomials about the special point \(x=\frac{\pi}{2}\):

Watch Sage do this one:
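For readers without Sage, here is a plain-Python sketch of the same expansion (not the original Sage cell). It relies on the identity \(\frac{d^k}{dx^k}\sin x=\sin\left(x+\frac{k\pi}{2}\right)\), so every derivative at the center is available in closed form; the function name `sin_taylor` is hypothetical.

```python
import math

def sin_taylor(n, a, x):
    """Degree-n Taylor polynomial of sin about x = a, evaluated at x.

    Uses d^k/dx^k sin(x) = sin(x + k*pi/2), so the k-th derivative at a
    is sin(a + k*pi/2).
    """
    return sum(math.sin(a + k * math.pi / 2) * (x - a) ** k / math.factorial(k)
               for k in range(n + 1))

a = math.pi / 2
# About pi/2 the odd-order derivatives are zero (up to rounding), so the
# polynomial is 1 - (x - pi/2)^2/2! + (x - pi/2)^4/4! - ...
for n in (0, 2, 4, 6):
    print(n, sin_taylor(n, a, 1.0), math.sin(1.0))
```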

Of course we're making an error:
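One standard way to quantify this error (not spelled out in these notes) is Taylor's theorem with the Lagrange form of the remainder: if \(f\) has \(n+1\) continuous derivatives between \(a\) and \(x\), then \begin{equation*}f(x)=\sum_{k=0}^{n}\frac{(x-a)^k}{k!}f^{(k)}(a)+\frac{(x-a)^{n+1}}{(n+1)!}f^{(n+1)}(\xi)\end{equation*} for some \(\xi\) between \(a\) and \(x\). Every derivative of sine is \(\pm\sin\) or \(\pm\cos\), hence bounded by 1 in absolute value, so writing \(T_n\) for the degree-\(n\) Taylor polynomial of sine about \(a\), \begin{equation*}\left|\sin x-T_n(x)\right|\le\frac{|x-a|^{n+1}}{(n+1)!}.\end{equation*}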

Let Sage show you the actual error on the interval \([0,\frac{\pi}{2}]\):
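A plain-Python stand-in for that plot: a sketch that estimates the largest error on \([0,\frac{\pi}{2}]\) by sampling evenly spaced points (so it can only underestimate the true maximum) and compares it with the bound \(\frac{(\pi/2)^{n+1}}{(n+1)!}\), which follows from the Lagrange remainder because every derivative of sine is bounded by 1 and \(|x-\frac{\pi}{2}|\le\frac{\pi}{2}\) on this interval. The helper names and the sample count are our own assumptions.

```python
import math

def sin_taylor(n, a, x):
    # k-th derivative of sin at a is sin(a + k*pi/2)
    return sum(math.sin(a + k * math.pi / 2) * (x - a) ** k / math.factorial(k)
               for k in range(n + 1))

def max_error(n, a, lo=0.0, hi=math.pi / 2, samples=1001):
    """Largest |sin(x) - T_n(x)| over evenly spaced sample points in [lo, hi]."""
    xs = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    return max(abs(math.sin(x) - sin_taylor(n, a, x)) for x in xs)

for n in (2, 4, 6, 8):
    err = max_error(n, math.pi / 2)
    # Remainder bound using |x - a| <= pi/2 and |derivatives of sin| <= 1
    bound = (math.pi / 2) ** (n + 1) / math.factorial(n + 1)
    print(n, err, bound)
```

The error is worst at the far end \(x=0\), the point farthest from the center \(\frac{\pi}{2}\).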

We do better on the interval \([0,\frac{\pi}{2}]\) (which is all we really need, if you recall) if we expand about \(\frac{\pi}{4}\):
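A minimal sketch of the comparison, again in plain Python rather than Sage, with hypothetical helper names: for each degree it estimates the largest sampled error on \([0,\frac{\pi}{2}]\) for the expansion about \(\frac{\pi}{2}\) and about \(\frac{\pi}{4}\).

```python
import math

def sin_taylor(n, a, x):
    # k-th derivative of sin at a is sin(a + k*pi/2)
    return sum(math.sin(a + k * math.pi / 2) * (x - a) ** k / math.factorial(k)
               for k in range(n + 1))

def max_error(n, a, lo=0.0, hi=math.pi / 2, samples=1001):
    """Largest |sin(x) - T_n(x)| over evenly spaced sample points in [lo, hi]."""
    xs = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    return max(abs(math.sin(x) - sin_taylor(n, a, x)) for x in xs)

for n in (2, 4, 6):
    print(n, "about pi/2:", max_error(n, math.pi / 2),
          "about pi/4:", max_error(n, math.pi / 4))
```

The remainder bound \(\frac{|x-a|^{n+1}}{(n+1)!}\) explains the improvement: with center \(\frac{\pi}{4}\) no point of the interval is farther than \(\frac{\pi}{4}\) from \(a\), versus \(\frac{\pi}{2}\) for center \(\frac{\pi}{2}\), so the bound shrinks by a factor of \(2^{n+1}\).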